Stat-Ease Blog

Strategy of Experiments for Formulations: Try Screening First!

Consider Screening Down Components to a Vital Few Before Studying Them In-Depth

At the outset of my chemical engineering career, I spent 2 years working with various R&D groups for a petroleum company in Southern California. One of my rotations brought me to their tertiary oil-recovery lab, which featured a wall of shelves filled to the brim with hundreds of surfactants. It amazed me how the chemist would seemingly know just the right combination of anionic, nonionic, cationic and amphoteric varieties to blend for the desired performance. I often wondered, though, whether empirical screening might have paid off by revealing a few surprisingly better ingredients. Then, after settling on the vital few components, an in-depth experiment may very well have led to the discovery of previously unknown synergisms. However, this was before the advent of personal computers and software for mixture design of experiments (DOE), so such studies were extremely daunting for non-statisticians.

Nowadays I help many formulators make the most from mixture DOE via Stat-Ease software's easy-to-use statistical tools. I was very encouraged to see this 2021 meta-analysis that found 200 or so recent publications (2016-2020) demonstrating the successful application of mixture DOE for food, beverage and pharmaceutical formulation development. I believe that this number can be multiplied many-fold to extrapolate these findings to other process industries: chemicals, coatings, cosmetics, plastics, and so forth. Also, keep in mind that most successes never get published; they are kept confidential until patented.

However, though I am very heartened by the widespread adoption of mixture DOE, screening remains underutilized, judging by my experience and by the very meager yield of publications from 2016 to present in a Google Scholar search. I believe the main reasons to be:

- Formulators prefer to rely on their profound knowledge of the chemistry for selection of ingredients (refer to my story about surfactants for tertiary oil recovery)

- The number of possibilities gets overwhelming; for example, this 2016 Nature publication reports that experimenters on a pear cell suspension culture got thrown off by the 65 blends they believed were required for simplex screening of 20 components. (Too bad: as shown in the Stat-Ease software screenshot below, cutting out the optional check blends and constraint-plane centroids would have reduced this substantially.)

- Factorial screening gets misapplied to mixtures, which unfortunately happens a lot because these process-focused experiments are simpler and more commonly used. This is really a shame, as pointed out in this Stat-Ease blog post.

I feel sure that it pays to screen down many components to a vital few before doing an in-depth optimization study. Stat-Ease software provides some great options for doing so. Give screening a try!!

For more details on mixture screening designs and a solid strategy of experiments for optimizing formulations, see my webinar on Strategy of Experiments for Optimal Formulation. If you would like to speak with our team about putting mixture DOE to good use for your R&D, please contact us.

Wrap-Up: Thanks for a great 2022 Online DOE Summit!

Thank you to our presenters and all the attendees who showed up to our 2022 Online DOE Summit! We're proud to host this annual, premier DOE conference to help connect practitioners of design of experiments and spread best practices & tips throughout the global research community. Nearly 300 scientists from around the world were able to make it to the live sessions, and many more will be able to view the recordings on the Stat-Ease YouTube channel in the coming months.

Due to a scheduling conflict, we had to move Martin Bezener's talk on "The Latest and Greatest in Design-Expert and Stat-Ease 360." This presentation will provide a briefing on the major innovations now available with our advanced software product, Stat-Ease 360, and a bit of what's in store for the future. Attend the whole talk to be entered into a drawing for a free copy of the book DOE Simplified: Practical Tools for Effective Experimentation, 3rd Edition. New date and time: Wednesday, October 12, 2022 at 10 am US Central time.

Even if you registered for the Summit already, you'll need to register for the new time on October 12. Click this link to head to the registration page. If you are not able to attend the live session, go to the Stat-Ease YouTube channel for the recording.

Want to be notified about our upcoming live webinars throughout the year, or about other educational opportunities? Think you'll be ready to speak on your own DOE experiences next year? Sign up for our mailing list! We send emails every month to let you know what's happening at Stat-Ease. If you just want the highlights, sign up for the DOE FAQ Alert to receive a newsletter from Engineering Consultant Mark Anderson every other month.

Thank you again for helping to make the 2022 Online DOE Summit a huge success, and we'll see you again in 2023!

Randomization Done Right

Randomization is essential for success with planned experimentation (DOE) to protect factor effects against bias by lurking variables. For example, consider the 8-run, two-level factorial design shown in Table 1. It lays out the low (−) and high (+) coded levels of each factor in standard, not random, order. Notice that factor C changes level only once throughout the experiment—first being set at the low (minus) level for four runs, followed by the remaining four runs set at the high (plus) level. Now, let’s say that the humidity in the room increases throughout the day—affecting the measured response. Since the DOE runs are not randomized, the change in humidity biases the calculated effect of the non-randomized factor C. Therefore, the effect of factor C includes the humidity change – it is no longer purely due to the change from low to high. This will cause analysis problems!

Table 1: Standard order of 8-run design
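The standard-order layout described for Table 1, and the bias it invites, can be reproduced with a few lines of Python. This is only a sketch under loud assumptions: the "humidity" drift of 1 response unit per run is invented for illustration, none of the factors has any real effect, and the helper names `standard_order` and `effect` are mine, not part of any Stat-Ease product.

```python
from itertools import product
import random

def standard_order(k):
    """Two-level full factorial in standard order (factor A changes fastest)."""
    # product() varies its last position fastest, so flip each tuple
    return [tuple(reversed(levels)) for levels in product((-1, 1), repeat=k)]

def effect(design, y, col):
    """Mean response at the high level minus mean response at the low level."""
    hi = [yi for run, yi in zip(design, y) if run[col] == 1]
    lo = [yi for run, yi in zip(design, y) if run[col] == -1]
    return sum(hi) / len(hi) - sum(lo) / len(lo)

design = standard_order(3)
for std, run in enumerate(design, start=1):
    print(std, " ".join("+" if x == 1 else "-" for x in run))

# Assumed lurking variable: the response creeps up 1 unit per run,
# and none of the factors has any real effect.
drift = [float(t) for t in range(len(design))]

standard_effects = {name: effect(design, drift, col) for col, name in enumerate("ABC")}
print("standard order:", standard_effects)

# Same runs in a shuffled order; the drift follows run position, not the factors.
rng = random.Random(2022)  # arbitrary seed, fixed only for repeatability
shuffled = design[:]
rng.shuffle(shuffled)
random_effects = {name: effect(shuffled, drift, col) for col, name in enumerate("ABC")}
print("random order:", random_effects)
```

In standard order the drift alone creates an apparent effect of 4 units for factor C (versus 2 for B and 1 for A), even though no factor does anything in this made-up scenario. A shuffled order will usually, though not always, spread the drift more evenly across the factors.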

Randomization itself presents some problems. For example, one possible random order is the classic standard layout, which, as you now know, does not protect against time-related effects. If this unlikely pattern, or any other undesirable pattern, shows up, then you should re-randomize the runs to reduce the possibility of bias from lurking variables.

Randomizing center points or other replicates

Replicates, such as center points, are used to collect information on the pure error of the system. To optimize the validity of this information, center points should be spaced out over the experimental run order. Random order may inadvertently place replicates in sequential order. This requires manual intervention by the researcher to break up or separate the repeated runs so that each run is completed independently of the matching run.
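If you plan run orders outside of DOE software, one simple way to automate this "shuffle, inspect, re-shuffle" routine is rejection sampling: keep drawing random orders until no two replicates land back to back. The sketch below assumes a 2^3 factorial with three center points; the function name `randomize_separated` and the "no adjacent replicates" rule are my own illustration, not how any particular package does it.

```python
import random
from itertools import product

def randomize_separated(runs, is_replicate, rng, max_tries=10000):
    """Shuffle runs, re-drawing until no two replicate runs are back to back."""
    order = list(runs)
    for _ in range(max_tries):
        rng.shuffle(order)
        back_to_back = any(
            is_replicate(a) and is_replicate(b) for a, b in zip(order, order[1:])
        )
        if not back_to_back:
            return order
    raise RuntimeError("no acceptable order found; relax the rule or add runs")

factorial = list(product((-1, 1), repeat=3))  # 8 factorial runs
center = [(0, 0, 0)] * 3                      # 3 replicated center points

run_order = randomize_separated(
    factorial + center,
    is_replicate=lambda run: run == (0, 0, 0),
    rng=random.Random(7),  # arbitrary seed, fixed only for repeatability
)
for number, run in enumerate(run_order, start=1):
    print(number, run)
```

Every accepted order is still a random draw, just from the subset of orders that keep the center points apart.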

In both Design-Expert® software and Stat-Ease 360 you can re-randomize by right-clicking on the Run column header and selecting Randomize, as shown in Figure 1. You can also simply edit the Run order and swap two runs by changing the run numbers manually. This is often the easiest method when you want to separate center points, for example.

Figure 1: Right-click to Randomize

When Randomization Doesn’t Work

While randomization is ideal statistically, sometimes it is cumbersome in practice. For instance, temperature can take a very long time to change, so completely randomizing the runs may cause the experiment to go way beyond the time budget. In this case, researchers look for ways to reduce the complete randomization of the design.

I want to highlight a common DOE mistake. An incorrect way to restrict the randomization is to use blocks. Blocking is a statistical technique that groups the experimental runs to eliminate a potential source of variation from the data analysis. A common blocking factor is “day”, setting the block groups to eliminate day-to-day variation. Although this is a form of restricting randomization, if you block on an experimental factor like temperature, then statistically the block (temperature) effect will be removed from the analysis. Any interaction effect with that block will also be removed. The removal of this key effect very likely destroys the entire analysis! Blocking is not a useful method for restricting the randomization of a factor that is being studied in the experiment. For more information on why you would block, see “Blocking: Mowing the Grass in Your Experimental Backyard”.
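To see why blocking on a studied factor backfires, here is a small numerical sketch. It assumes a noise-free, made-up response in which temperature (factor A) has a true effect of 6 units and factor B a true effect of 2; the data and helper names are purely illustrative.

```python
from itertools import product

def mean(values):
    return sum(values) / len(values)

def effect(design, y, col):
    """Mean response at the high level minus mean response at the low level."""
    hi = mean([yi for run, yi in zip(design, y) if run[col] == 1])
    lo = mean([yi for run, yi in zip(design, y) if run[col] == -1])
    return hi - lo

# 2^3 design in standard order; columns are A (temperature), B, C
design = [tuple(reversed(r)) for r in product((-1, 1), repeat=3)]

# Hypothetical noise-free response: A shifts it by 6 units, B by 2, C by nothing
y = [10 + 3 * a + 1 * b for a, b, c in design]

# The mistake: "block" on temperature, so each block holds a single A level
block = [1 if run[0] == -1 else 2 for run in design]

# Removing the block effect means subtracting each block's mean
block_mean = {k: mean([yi for yi, bk in zip(y, block) if bk == k]) for k in (1, 2)}
y_adj = [yi - block_mean[bk] for yi, bk in zip(y, block)]

temp_before = effect(design, y, 0)
temp_after = effect(design, y_adj, 0)
b_after = effect(design, y_adj, 1)
print("temperature effect before block removal:", temp_before)
print("temperature effect after block removal: ", temp_after)
print("factor B effect after block removal:    ", b_after)
```

The temperature effect of 6 drops to 0 once the block means are removed, because the block column and the temperature column are one and the same. Factor B survives intact at 2.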

If factor changes need to be restricted (not fully randomized), then building a split-plot design is the best way to go. A split-plot design takes into account the hard-to-change versus easy-to-change factors in a restricted randomization test plan. Perfect! The associated analysis properly assesses the differences in variation between these two groups of factors and provides the correct effect evaluation. The statistical analysis is a bit more complex, but good DOE software will handle it easily. Split-plot designs are a more complex topic, but commonly used in today’s experimental practices. Learn more about split-plot designs in this YouTube video: Split Plot Pros and Cons – Dealing with a Hard-to-Change Factor.
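The restricted randomization behind a split plot can be sketched as a two-stage shuffle: first randomize the order of the groups (whole plots) that share one setting of the hard-to-change factor, then randomize the easy-to-change runs within each group. The code below is a simplified illustration, not the algorithm used by Design-Expert or Stat-Ease 360: it assumes one hard-to-change factor (temperature), two easy-to-change factors, two whole plots per temperature level, and a full 2^2 set of subplot runs in every whole plot.

```python
import random
from itertools import product

def split_plot_order(htc_levels, etc_runs, reps, rng):
    """Return rows of (whole_plot, hard_to_change_level, *easy_to_change_levels)."""
    # Stage 1: randomize the order of the whole plots
    whole_plots = [level for level in htc_levels for _ in range(reps)]
    rng.shuffle(whole_plots)
    plan = []
    for wp, level in enumerate(whole_plots, start=1):
        # Stage 2: randomize the easy-to-change runs inside this whole plot
        sub = list(etc_runs)
        rng.shuffle(sub)
        plan.extend((wp, level) + tuple(s) for s in sub)
    return plan

plan = split_plot_order(
    htc_levels=(-1, 1),                       # temperature: low, high
    etc_runs=list(product((-1, 1), repeat=2)),  # easy-to-change factors B and C
    reps=2,                                   # whole plots per temperature level
    rng=random.Random(11),                    # arbitrary seed for repeatability
)
for row in plan:
    print(row)

temperature_changes = sum(1 for p, q in zip(plan, plan[1:]) if p[1] != q[1])
print("temperature resets needed:", temperature_changes)
```

With 16 runs and 4 whole plots, temperature changes at most 3 times, compared with potentially many more under complete randomization. Keep in mind that the resulting data need a split-plot analysis, not the ordinary one.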

Wrapping up

Randomization is essential for valid and unbiased factor effect calculations, which is central to effective design of experiments analysis. It is up to the experimenter to ensure that the randomization of the experimental runs meets the DOE goals. Manual intervention may be required to separate any replicated points, such as center points. If complete randomization is not possible from a practical standpoint, build a split-plot design that statistically accounts for those restrictions.

2022 Online DOE Summit

Register now for the 2022 Online DOE Summit!

We're excited to host the upcoming 2022 Online DOE Summit, the premier conference on practical applications of industrial design of experiments (DOE). This year, we have 13 speakers giving talks on a variety of industry-based DOE practices and case studies. Join us on October 4-6 for these enlightening presentations, and learn how you can use DOE to make breakthrough improvements in your processes and products.

Some highlights this year:

- Stat-Ease President Martin Bezener will discuss when, how, and why to use mixture designs

- Geneticist Randall Niedz will showcase five examples of how DOE can be used in horticulture, plant breeding, and genetic applications

- Researchers JoAnn Coleman (pharma) and Jay Davies (military) will discuss implementation and applications of DOE within their business units

Registration for our Summit is absolutely FREE! These talks are given as webinars, and will all be recorded for posterity on our YouTube channel, Statistics Made Easy by Stat-Ease. Technical professionals from around the globe are invited to attend the live sessions via GoToWebinar, or plan to watch the on-demand recordings after the conference.

We look forward each year to bringing expert DOE professionals to our Online DOE Summit so our software users can learn tips, tricks, and strategies from one another. This conference is open to anyone who wants to attend, so please share this event with anyone you think might be interested.

See you in October!

Blocking: Mowing the Grass in Your Experimental Backyard

One challenge of running experiments is controlling the variation from process, sampling and measurement. Blocking is a statistical tool used to remove the variation coming from uncontrolled variables that are not part of the experiment. When the noise is reduced, the primary factor effects are estimated more easily, which allows the system to be modeled more precisely.

For example, an experiment may contain too many runs to be completed in just one day. However, the process may not operate identically from one day to the next, causing an unknown amount of variation to be added to the experimental data. By blocking on the days, the day-to-day variation is removed from the data before the factor effects are calculated. Other typical blocking variables are raw material batches (lots), multiple “identical” machines or test equipment, people doing the testing, etc. In each case the blocking variable is simply a resource required to run the experiment, not a factor of interest.

Blocking is the process of statistically splitting the runs into smaller groups. The researcher might assume that arranging runs into groups randomly is ideal; after all, we all learn that random order is best! However, this is not true when the goal is to statistically assess the variation between groups of runs, and then calculate clean factor effects. Design-Expert® software splits the runs into groups using statistical properties such as orthogonality and aliasing. For example, a two-level factorial design will be split into blocks using the same optimal technique used for creating fractional factorials. The design is broken into parts by using the coded pattern of the high-order interactions. If there are 5 factors, the ABCDE term can be used. All the runs with “-” levels of ABCDE are put in the first block, and the runs with “+” levels of ABCDE are put in the second block. Similarly, response surface designs are also blocked statistically so that the factor effects can be estimated as cleanly as possible.
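The ABCDE split described above is easy to check by hand or with a short script. The following sketch builds the 32-run, five-factor full factorial, assigns each run to a block by the sign of the ABCDE product, and confirms that every factor stays balanced within each block. Variable names are mine, for illustration only.

```python
from itertools import product
from math import prod

# 2^5 full factorial in standard order; columns are A, B, C, D, E
runs = [tuple(reversed(r)) for r in product((-1, 1), repeat=5)]

# Block 1 gets the runs where ABCDE is "-", block 2 the runs where it is "+"
blocks = {1: [], 2: []}
for run in runs:
    blocks[1 if prod(run) == -1 else 2].append(run)

for number, members in blocks.items():
    # A column sum of zero means equal numbers of low and high settings
    column_sums = [sum(run[col] for run in members) for col in range(5)]
    print("block", number, "runs:", len(members), "column sums:", column_sums)
```

Each block holds 16 runs, and each factor appears 8 times low and 8 times high within it, so the main effects are not tangled up with the block difference. Only the five-factor interaction is given up.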

Blocks are not “free”. One degree of freedom (df) is used for each additional block. If there are no replicates in the design, such as a standard factorial design, then a model term may be sacrificed to filter out block-by-block variation. Usually these are high-order interactions, making the “cost” minimal.

After the experiment is completed, the data analysis begins. The first line in the analysis of variance (ANOVA) will be a Block sum of squares. This is the amount of variation in the data that is due to the block-to-block differences. This noise is removed from the total sum of squares before any other effects are calculated. Note: Since blocking is a restriction on the randomization of the runs, this violates one of the ANOVA assumptions (independent residuals) and no F-test for statistical significance is done. Once the block variation is removed, the model terms can be tested against a smaller residual error. This allows factor effects to stand out more, strengthening their statistical significance.
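For readers who like to see the arithmetic, the Block sum of squares is the sum over blocks of (runs in the block) × (block mean − grand mean)². The sketch below applies that formula to eight made-up responses run over two days; the numbers are hypothetical and chosen only to keep the arithmetic easy to follow.

```python
def total_sum_of_squares(y):
    """Sum of squared deviations from the grand mean."""
    grand = sum(y) / len(y)
    return sum((yi - grand) ** 2 for yi in y)

def block_sum_of_squares(y, block):
    """Sum over blocks of n_block * (block mean - grand mean)^2."""
    grand = sum(y) / len(y)
    ss = 0.0
    for b in sorted(set(block)):
        yb = [yi for yi, bk in zip(y, block) if bk == b]
        ss += len(yb) * (sum(yb) / len(yb) - grand) ** 2
    return ss

# Hypothetical responses: four runs on day 1, four runs on day 2
y = [12, 14, 13, 15, 17, 19, 18, 20]
day = [1, 1, 1, 1, 2, 2, 2, 2]

ss_total = total_sum_of_squares(y)
ss_block = block_sum_of_squares(y, day)
print("total SS:", ss_total)
print("block SS:", ss_block)
print("left for model terms and residual:", ss_total - ss_block)
```

In this toy data set the day-to-day shift accounts for 50 of the 60 units of total variation, leaving only 10 to be divided between the model terms and the residual.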

Example showing the advantage of blocking:

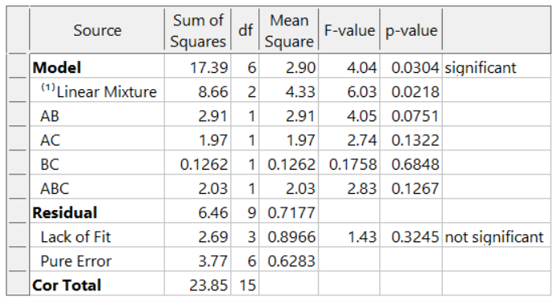

In this example, a 16-blend mixture experiment aimed at fitting a special-cubic model is completed over 2 days. The formulators expect appreciable day-to-day variation. Therefore, they build a 16-run blocked design (8 runs per day). Here is the ANOVA:

The adjusted R² = 0.8884 and the predicted R² = 0.7425. Due to the blocking, the day-to-day variation (sum of squares of 20.48) is removed. This increases the sensitivity of the remaining tests, resulting in an outstanding predictive model!

What if these formulators had not thought of blocking and had instead simply run the experiment in a completely randomized order over two days? The ANOVA (again for the designed-for special-cubic mixture model) now looks like this:

The model is greatly degraded, with adjusted R² = 0.5487 and predicted R² = 0.0819, and it includes many insignificant terms. While the blocked model shown above explains 74% of the variation in predictions (the predicted R²), the unblocked model explains only 8%, leaving 92% unexplained. Because the runs were randomized across both days without blocking, the day-to-day variation pollutes all the effects, thus reducing the predictive ability of the model.

Conclusion:

Blocks are like an insurance policy – they cost a little, and often aren’t required. However, when they are needed (that is, when block differences are large), they can be immensely helpful for sorting out the real effects and making better predictions. Now that you know about blocking, consider whether it is needed to make the most of your next experiment.

Blocking FAQs:

How many runs should be in a block?

My rule-of-thumb is that a block should have at least 4 runs. If the block size is smaller, then don’t use blocking. In that case, the variable is simply another source of variation in the process.

Can I block a design after I’ve run it?

You cannot statistically add blocks to a design after it is completed. This must be planned into the design at the building stage. However, Design-Expert has sophisticated analysis tools and can analyze a block effect even if it was not done perfectly (added to the design after running the experiment). In this case, use Design Evaluation to check the aliasing with the blocks, watching for main effects or two-factor interactions (2FI).

Are there any assumptions being made about the blocks?

There is an assumption that the difference between the blocks is a simple linear shift in the data, and that this variable does not interact with any other variable.

I want to restrict the randomization of my factor because it is hard to change the setting with every run. Can I use blocking to do this?

No! Only block on variables that you are not studying. If you need to restrict the randomization of a factor, consider using a split-plot design.