Stat-Ease Blog


Hear Ye, Hear Ye: A Response Surface Method (RSM) Experiment on Sound Produces Surprising Results

A few years ago, while evaluating our training facility in Minneapolis, I came up with a fun experiment that demonstrates a great application of RSM for process optimization. It involves how sound travels to our students as a function of where they sit. The inspiration for this experiment came from a presentation by Tom Burns of Starkey Labs to our 5th European DOE User Meeting. As I reported in our September 2014 Stat-Teaser, Tom put RSM to good use for optimizing hearing aids.

Background

Classroom acoustics affect speech intelligibility and thus the quality of education. The sound intensity from a point source decays rapidly with distance according to the inverse square law. However, reflections and reverberations create variations by location for each student: some good (e.g., the Whispering Gallery at the Chicago Museum of Science and Industry, a delightful place to visit, preferably with young people in tow), but others bad (e.g., echoing). Furthermore, the acoustics can be expected to change quite a bit between an empty room and a fully occupied one. (Our then-IT guy Mike, who moonlights as a sound-system tech, called the audience “meat baffles”.)
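To put a number on the inverse square law, here is a minimal Python sketch. It assumes an idealized free-field point source with no reflections, which a real classroom is not, and the function name and distances are illustrative rather than taken from the experiment.

```python
import math


def level_drop_db(r_near, r_far):
    """Drop in sound level (dB) when moving from r_near to r_far from a point source.

    Assumes free-field inverse-square spreading: intensity ~ 1 / r**2, so the
    level difference is 10*log10((r_far/r_near)**2) = 20*log10(r_far/r_near).
    """
    return 20 * math.log10(r_far / r_near)


# Hypothetical distances, in any consistent unit
print(round(level_drop_db(1, 2), 2))  # doubling the distance
print(round(level_drop_db(1, 3), 2))  # tripling the distance
```

Under this idealization each doubling of distance costs about 6 dB; departures from that pattern in a real room point to reflections and reverberation.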

Sound is measured on a logarithmic scale in units called “decibels” (dB). The A-weighted variant (dBA) adjusts for the varying sensitivity of the human ear across frequencies.
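Because the scale is logarithmic, small differences in decibels correspond to large ratios of sound intensity. The sketch below converts a level difference to an intensity ratio; the function name is illustrative, and it ignores A-weighting, which changes how each frequency is weighted but not the logarithmic arithmetic.

```python
def intensity_ratio(delta_db):
    """Ratio of sound intensities for a level difference of delta_db decibels."""
    return 10 ** (delta_db / 10)


for delta in (3, 10, 20):
    print(delta, "dB ->", round(intensity_ratio(delta), 1), "x intensity")
```

A difference of 10 dB is a tenfold change in intensity, and roughly 3 dB is a doubling.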

Frequency is another aspect of sound that must be taken into account for acoustics. According to Wikipedia, the typical adult male speaks at a fundamental frequency from 85 to 180 Hz. The range for a typical adult female is from 165 to 255 Hz.

Procedure

Stat-Ease training room at one of our old headquarters—sound test points spotted by yellow cups.

This experiment sampled sound on a 3x3 grid from left to right (L-R, coded -1 to +1) and front to back (F-B, -1 to +1)—see a picture of the training room above for location—according to a randomized RSM test plan. A quadratic model was fitted to the data, with its predictions then mapped to provide a picture of how sound travels in the classroom. The goal was to provide acoustics that deliver just enough loudness to those at the back without blasting the students sitting up front.

Using sticky notes as markers (labeled by coordinates), I laid out the grid in the Stat-Ease training room across the first 3 double-wide-table rows (4th row excluded) in two blocks:

- 2² factorial (square perimeter points) with 2 center points (CPs).

- Remainder of the 3² design (mid-points of edges) with 2 additional CPs.
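The two-block layout can be sketched in a few lines of Python. This is only an illustration of the structure described above, not the Design-Expert procedure: the function name, the seed, and the default of two center points per block are assumptions.

```python
import random
from itertools import product


def build_blocks(center_points=2, seed=1):
    """Split a 3x3 grid in coded units (-1, 0, +1) into two randomized blocks."""
    grid = list(product((-1, 0, 1), repeat=2))         # all 9 (A, B) locations
    corners = [p for p in grid if 0 not in p]          # 2^2 factorial points
    edges = [p for p in grid if p.count(0) == 1]       # mid-points of edges
    center = (0, 0)

    rng = random.Random(seed)                          # fixed seed for a repeatable order
    block1 = corners + [center] * center_points
    block2 = edges + [center] * center_points
    rng.shuffle(block1)                                # randomize run order within each block
    rng.shuffle(block2)
    return block1, block2


block1, block2 = build_blocks()
print("Block 1:", block1)
print("Block 2:", block2)
```

Running block 1 first allows a check for curvature before committing to the remaining runs.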

I generated sound from the Online Tone Generator at 170 hertz—a frequency chosen to simulate voice at the overlap of male (lower) vs female ranges. Other settings were left at their defaults: mid-volume, sine wave. The sound was amplified by twin Dell 6-watt Harman-Kardon multimedia speakers, circa 1990s. They do not build them like this anymore 😉 These speakers reside on a counter up front—spaced about a foot apart. I measured sound intensity on the dBA scale with a GoerTek Digital Mini Sound Pressure Level Meter (~$18 via Amazon).

Results

I generated my experiment via the Response Surface tab in Design-Expert® software (this 3² design shows up under "Miscellaneous" as Type "3-level factorial"). After some manipulation of the layout (not too difficult), I divided the runs into the two blocks, within which I re-randomized the order. See the results tabulated below.

| Block | Run | Space Type | Coordinate (A: L-R) | Coordinate (B: F-B) | Sound (dBA) |
|---|---|---|---|---|---|
| 1 | 1 | Factorial | -1 | 1 | 70 |
| 1 | 2 | Center | 0 | 0 | 58 |
| 1 | 3 | Factorial | 1 | -1 | 73.3 |
| 1 | 4 | Factorial | 1 | 1 | 62 |
| 1 | 5 | Center | 0 | 0 | 58.3 |
| 1 | 6 | Factorial | -1 | -1 | 71.4 |
| 1 | 7 | Center | 0 | 0 | 58 |
| 2 | 8 | CentEdge | -1 | 0 | 64.5 |
| 2 | 9 | Center | 0 | 0 | 58.2 |
| 2 | 10 | CentEdge | 0 | 1 | 61.8 |
| 2 | 11 | CentEdge | 0 | -1 | 69.6 |
| 2 | 12 | Center | 0 | 0 | 57.5 |
| 2 | 13 | CentEdge | 1 | 0 | 60.5 |

Notice that the readings at the center are consistently lower than those around the edges of the three-table space. So, not surprisingly, the factorial model based on block 1 exhibits significant curvature (p<0.0001). That leads to making use of the second block of runs to fill out the RSM design in order to fit the quadratic model. I was hoping things would play out like this to provide a teaching point in our DOESH class: the value of an iterative strategy of experimentation.
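The raw size of that curvature can be seen by comparing the average of the four factorial points with the average of the center points in block 1. The sketch below does only that comparison using the tabulated readings; it is not the formal curvature test that produced the p-value, which came from the software.

```python
from statistics import mean

# Block 1 readings (dBA) from the results table
factorial_points = [70.0, 73.3, 62.0, 71.4]   # corners of the grid
center_points = [58.0, 58.3, 58.0]            # repeated center location

gap = mean(factorial_points) - mean(center_points)
spread = max(center_points) - min(center_points)

print("factorial mean: %.2f dBA" % mean(factorial_points))
print("center mean:    %.2f dBA" % mean(center_points))
print("gap:            %.2f dB" % gap)
print("center spread:  %.2f dB" % spread)
```

A gap of about 11 dB against center-point readings that agree within a few tenths of a dB is what makes a straight-line (factorial) model untenable here.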

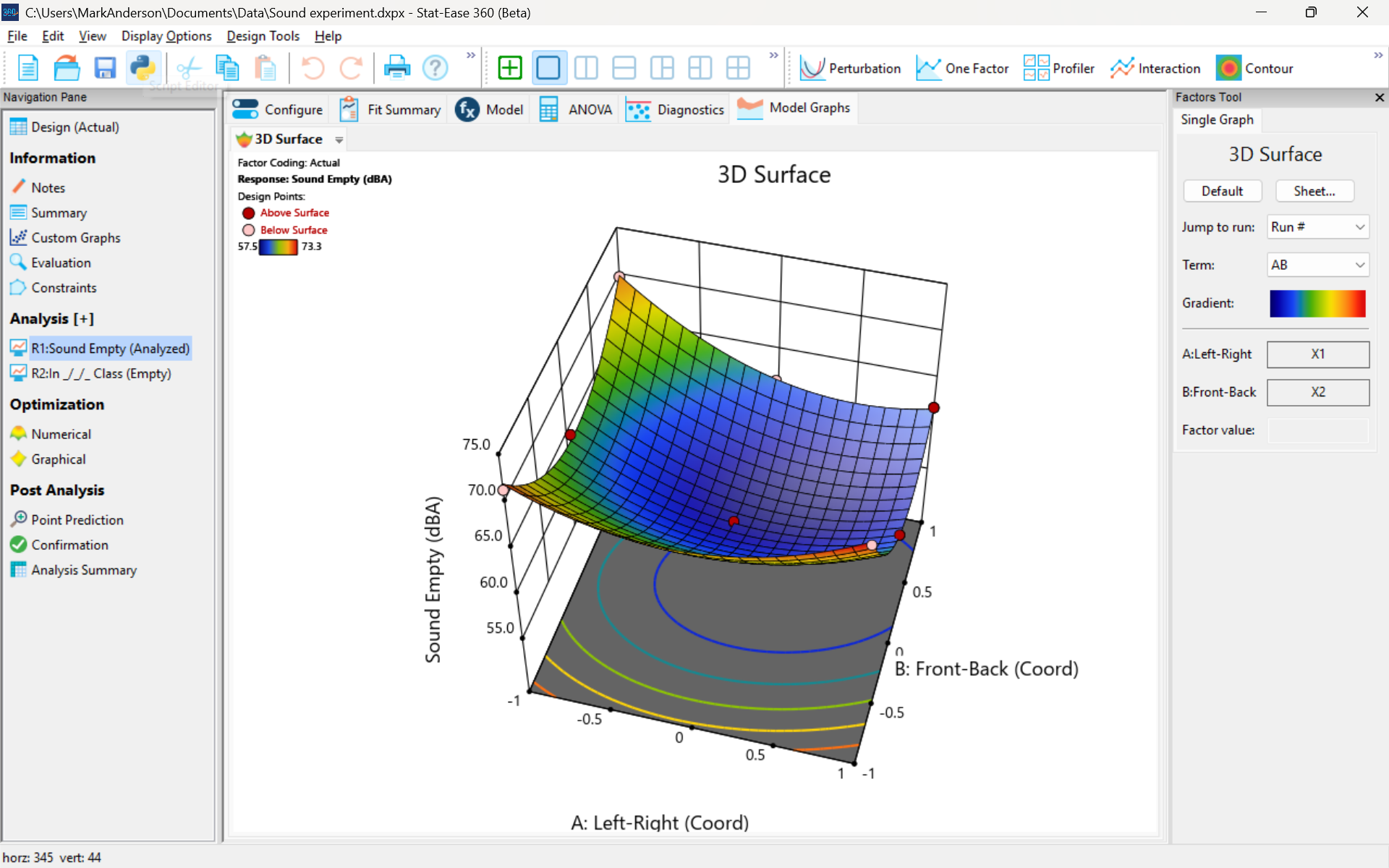

The 3D surface graph shown below illustrates the unexpected dampening (cancelling?) of sound at the middle of our Stat-Ease training room.

3D surface graph of sound by classroom coordinate.
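For readers without the software, a surface like this one can be approximated from the table with ordinary least squares. The following is a sketch under stated assumptions: it fits the full quadratic model in coded units, ignores the block effect, and uses a coarse grid search in place of numeric optimization. The helper names are illustrative, and the software's own analysis may differ in detail.

```python
# Runs from the results table: (A: L-R, B: F-B, sound in dBA)
RUNS = [
    (-1, 1, 70.0), (0, 0, 58.0), (1, -1, 73.3), (1, 1, 62.0),
    (0, 0, 58.3), (-1, -1, 71.4), (0, 0, 58.0), (-1, 0, 64.5),
    (0, 0, 58.2), (0, 1, 61.8), (0, -1, 69.6), (0, 0, 57.5),
    (1, 0, 60.5),
]


def terms(a, b):
    """Quadratic model terms: intercept, A, B, AB, A^2, B^2."""
    return [1.0, a, b, a * b, a * a, b * b]


def solve(matrix, rhs):
    """Solve a small linear system by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (aug[i][n] - s) / aug[i][i]
    return x


def fit_quadratic(runs):
    """Least-squares coefficients via the normal equations (X'X) b = X'y."""
    rows = [terms(a, b) for a, b, _ in runs]
    ys = [y for _, _, y in runs]
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(rows, ys)) for i in range(k)]
    return solve(xtx, xty)


def predict(coef, a, b):
    return sum(c * t for c, t in zip(coef, terms(a, b)))


coef = fit_quadratic(RUNS)
grid = [i / 20 - 1 for i in range(41)]  # -1.00 to +1.00 in steps of 0.05
surface = [(predict(coef, a, b), a, b) for a in grid for b in grid]
low, high = min(surface), max(surface)

print("coefficients:", [round(c, 3) for c in coef])
print("predicted minimum %.1f dBA at A=%.2f, B=%.2f" % low)
print("predicted maximum %.1f dBA at A=%.2f, B=%.2f" % high)
```

On these data the unblocked fit places the quietest spot slightly right of and behind the room's center and the loudest at the front-right corner, in line with the dip in the middle of the surface.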

Perhaps this sound ‘map’ is typical of most classrooms. I suppose that it could be counteracted by putting acoustic reflectors overhead. However, the minimum loudness of 57.4 dBA (found via numeric optimization and flagged on the surface pictured) is very audible by my reckoning (having sat in that position when measuring the dBA). It falls within the green zone on OSHA’s decibel scale, as does the maximum of 73.6 dBA, so all is good.

What next

The results documented here came from an empty classroom. I would like to do it again with students (aka meat baffles) present. I wonder how that will affect the sound map. Of course, many other factors could be tested. For example, Rachel from our Front Office team suggested I try elevating the speakers. Another issue is the frequency of sound emitted. Furthermore, the oscillation can be varied—sine, square, triangle and sawtooth waves could be tried. Other types of speakers would surely make a big difference.

What else can you think of to experiment on for sound measurement? Let me know.

October Publication Roundup

Here's the latest Publication Roundup! In these monthly posts, we'll feature recent papers that cited Design-Expert® or Stat-Ease® 360 software. Please submit your paper to us if you haven't seen it featured yet!

Featured Article

Green extraction of poplar type propolis: ultrasonic extraction parameters and optimization via response surface methodology

BMC Chemistry, 19, Article number: 266 (2025)

Authors: Milena Popova, Boryana Trusheva, Ralitsa Chimshirova, Hristo Petkov, Vassya Bankova

Mark's comments: A worthy application of response surface methods for optimizing an environmentally friendly process producing valuable bioactive compounds. I see they used Box-Behnken designs appropriately - good work!

Be sure to check out this important study, and the other research listed below!

More new publications from October

- Development and evaluation of a battery powered harvester for sustainable leafy vegetable cultivation

Scientific Reports, volume 15, Article number: 33812 (2025)

Authors: Kalluri Praveen, Yenikapalli Anil Kumar, Atul Kumar Shrivastava

- Rutin/ZnO/mesoporous Silica-based Nano-hydrogel accelerated topical wound healing in albino mice via potential synergistic bioactive response

European Journal of Pharmaceutics and Biopharmaceutics, Volume 216, November 2025, 114875

Authors: Huma Butt, Haji Muhammad Shoaib Khan, Muhammad Sohail, Amina Izhar, Farhan Siddique, Maryam Bashir, Usman Aftab, Hasnain Shaukat

- Production of improved Ethiopian Tej using mixed lactic acid bacteria and yeast starter cultures

Scientific Reports, volume 15, Article number: 33460 (2025)

Authors: Ketemaw Denekew, Fitsum Tigu, Dagim Jirata Birri, Mogessie Ashenafi, Feng-Yan Bai, Asnake Desalegn

- Design and Optimization of Trastuzumab-Functionalized Nanolipid Carriers for Targeted Capecitabine Delivery: Anti-Cancer Effectiveness Evaluation in MCF-7 and SKBR3 Cells

International Journal of Nanomedicine, Volume 2025:20 Pages 12075–12102, 3 October 2025

Authors: Shubhashree Das, Bhabani Sankar Satapathy, Gurudutta Pattnaik, Sovan Pattanaik, Yahya Alhamhoom, Mohamed Rahamathulla, Mohammed Muqtader Ahmed, Ismail Pasha

- Design and test analysis of a rotary cutter device for root cutting of golden needle mushroom

Scientific Reports, volume 15, Article number: 37219 (2025)

Authors: Limin Xie, Yuxuan Gao, Zhiqiang Lin, Feifan He, Wenxin Duan, Dapeng Ye

- Central composite design optimized fluorescent method using dual doped graphene quantum dots for lacosamide determination in biological samples

Scientific Reports, volume 15, Article number: 36507 (2025)

Authors: Ahmed Serag, Rami M. Alzhrani, Reem M. Alnemari, Maram H. Abduljabbar, Atiah H. Almalki

- Innovation in functional bakery products: formulation and analysis of moringa-fortified millet cookies

Journal of Food Measurement and Characterization, Published: 17 October 2025

Authors: Anshu, Neeru & Ashwani Kumar

- Improving the efficacy and targeting of letrozole for the control of breast cancer: in vitro and in vivo studies

Naunyn-Schmiedeberg's Archives of Pharmacology, Published: 13 October 2025

Authors: Shahira F. El Menshawe, Seif E. Ahmed, Amr Gamal Fouad, Amira H. Hassan

- Optimization and Evaluation of Functionally Engineered Paliperidone Nanoemulsions for Improved Brain Delivery via Nasal Route

Molecular Pharmaceutics, Published October 7, 2025

Authors: Niserga D. Sawant, Pratima A. Tatke, Namita D. Desai

- Innovative inhalable dry powder: nanoparticles loaded with Crizotinib for targeted lung cancer therapy

BMC Cancer, volume 25, Article number: 1526 (2025)

Authors: Faiza Naureen, Yasar Shah, Maqsood Ur Rehman, Fazli Nasir, Abdul Saboor Pirzada, Jamelah Saleh Al-Otaibi, Maria Daglia, Haroon Khan

September Publication Roundup

Here's the latest Publication Roundup! In these monthly posts, we'll feature recent papers that cited Design-Expert® or Stat-Ease® 360 software. Please submit your paper to us if you haven't seen it featured yet!

When choosing a featured article for each month, we try to make sure it's available for everyone to read. Unfortunately, none of this month's publications that met our standards are available to the public, so there's no featured article this month. We still recommend checking out the incredible research done by these teams, and congratulations to everyone for publishing!

New publications from September

- Evaluating the efficacy of nintedanib-invasomes as a therapy for non-small cell lung cancer

European Journal of Pharmaceutics and Biopharmaceutics, Volume 214, September 2025, 114810

Authors: Tamer Mohamed Mahmoud, Mohamed AbdElrahman, Mary Eskander Attia, Marwa M. Nagib, Amr Gamal Fouad, Amany Belal, Mohamed A.M. Ali, Nisreen Khalid Aref Albezrah, Shatha Hallal Al-Ziyadi, Sherif Faysal Abdelfattah Khalil, Mary Girgis Shahataa, Dina M. Mahmoud

- Optimization of fermentation conditions for bioethanol production from oil palm trunk sap

Journal of the Indian Chemical Society, Volume 102, Issue 9, September 2025, 101943

Authors: Abdul Halim Norhazimah, Teh Ubaidah Noh, Siti Fatimah Mohd Noor

- Microwave-assisted modification of a solid epoxy resin with a Peruvian oil

Progress in Organic Coatings, Volume 206, September 2025, 109333

Authors: Daniel Obregón, Antonella Hadzich, Lunjakorn Amornkitbamrung, G. Alexander Groß, Santiago Flores

Authors: Hüsniye Hande Aydın, Esra Karataş, Zeynep Şenyiğit, Hatice Yeşim Karasulu

- Development of an innovative method of Salmonella Typhi biofilm quantification using tetrahydrofuran and response surface methodology

Microbial Pathogenesis, Volume 208, November 2025, 107992

Authors: Aditya Upadhyay, Dharm Pal, Awanish Kumar

- Bauhinia monandra derived mesoporous activated carbon for the efficient adsorptive removal of phenol from wastewater

Scientific Reports volume 15, Article number: 31790 (2025)

Authors: Bhojaraja Mohan, Chikmagalur Raju Girish, Gautham Jeppu, Praveengouda Patil

- Quality by Design-Driven Development, Greenness, and Whiteness Assessment of a Robust RP-HPLC Method for Simultaneous Quantification of Ellagic, Sinapic, and Syringic Acids

Separation Science Plus, Volume 8, Issue 9, September 2025, e70126

Authors: V. S. Mannur, Rahul Koli, Atith Muppayyanamath

- Dissolution and separation of carbon dioxide in biohydrogen by monoethanolamine-based deep eutectic solvents

Journal of Chemical Technology and Biotechnology, Early View, 12 September 2025

Authors: Xiaokai Zhou, Yanyan Jing, Cunjie Li, Quanguo Zhang, Yameng Li, Tian Zhang, Kai Zhang

- Strategic Implementation of Analytical Quality by Design in RP-HPLC Method Development for Andrographis paniculata and Chrysopogon zizanioides Extract-Loaded Phytosomes

Separation Science Plus, Volume 8, Issue 9, September 2025, e70125

Authors: Abisesh Muthusamy, Vinayak Mastiholimath, Darasaguppe R. Harish, Atith Muppayyanamath, Rahul Koli

- Improving the bioavailability and therapeutic efficacy of valsartan for the control of cardiotoxicity-associated breast cancer

Journal of Drug Targeting, Published online: 29 Sep 2025

Authors: Mary Eskander Attia, Fatma I. Abo El-Ela, Saad M. Wali, Amr Gamal Fouad, Amany Belal, Fahad H. Baali, Nisreen Khalid Aref Albezrah, Mohammed S. Alharthi, Marwa M. Nagib

August Publication Roundup

Here's the latest Publication Roundup! In these monthly posts, we'll feature recent papers that cited Design-Expert® or Stat-Ease® 360 software. Please submit your paper to us if you haven't seen it featured yet!

Featured Article

Design and optimization of imageable microspheres for locoregional cancer therapy

Scientific Reports volume 15, Article number: 27487 (2025)

Authors: Brenna Kettlewell, Andrea Armstrong, Kirill Levin, Riad Salem, Edward Kim, Robert J. Lewandowski, Alexander Loizides, Robert J. Abraham, Daniel Boyd

Mark's comments: This is a great application of mixture design for optimal formulation of a medical-grade glass. The researchers used Stat-Ease software tools to improve the properties of microspheres to an extent that their use can be extended to cancers beyond the current application to those located in the liver. Well done!

Be sure to check out this important study, and the other research listed below!

More new publications from August

- Use of experimental design for screening and optimization of variables influencing photocatalytic degradation of pollutants in aqueous media: A review of chemometrics tools

Chemical Engineering Research and Design, Volume 220, August 2025, Pages 270-291

Authors: Pedro César Quero–Jiménez, Aracely Hernández–Ramírez, Jorge Luis Guzmán–Mar, Jorge Basilio de la Torre–López, Matheus Silva–Gigante, Laura Hinojosa–Reyes

- Analytical Quality by Design-Based Stability-Indicating UHPLC Method for Determination of Inavolisib in Bulk and Formulation

Separation Science Plus, no. 8 (2025): 8, e70110

Authors: Ashwinkumar Matta, Raja Sundararajan

- Enhanced anti-infective activities of sinapic acid through nebulization of lyophilized protransferosomes

Frontiers in Nanotechnology | Biomedical Nanotechnology, Volume 7 - 2025

Authors: Hani A. Alhadrami, Amr Gamal, Ngozi Amaeze, Ahmed M. Sayed, Mostafa E. Rateb, and Demiana M. Naguib

- Optimizing Anti-Corrosive Properties of Polyester Powder Coatings Through Montmorillonite-Based Nanoclay Additive and Film Thickness

Corrosion and Materials Degradation, 2025, 6(3), 39

Authors: Marshall Shuai Yang, Chengqian Xian, Jian Chen, Yolanda Susanne Hedberg, James Joseph Noël

- Regulatory mechanism and multi-index coordinated optimization of pipeline transportation performance of coarse-grained gangue slurry: Experimental and simulation investigation

Physics of Fluids 37, 073343 (2025)

Authors: Jianfei Xu (许健飞); Jixiong Zhang (张吉雄); Nan Zhou (周楠); Hao Yan (闫浩); Wenfu Zhou (周文福); Qian Chen (陈乾); Jiarun Chen (陈嘉润)

- Optimization of clayey soil parameters with aeolian sand through response surface methodology and a desirability function

Scientific Reports volume 15, Article number: 30831 (2025)

Authors: Ghania Boukhatem, Messaouda Bencheikh, Mohammed Benzerara, Mehmet Serkan Kırgız, N. Nagaprasad, Krishnaraj Ramaswamy, Souhila Rehab-Bekkouche, R. Shanmugam

- Development of electromagnetic drop weight release mechanism for human occupied vehicle

Scientific Reports volume 15, Article number: 30663 (2025)

Authors: Sathia Narayanan Dharmaraj, Karthikeyan Shanmugam, Jothi Chithiravel, Ramesh Sethuraman

- Operating parameter optimization and experiment of spiral outer grooved wheel seed metering device based on discrete element method

Scientific Reports volume 15, Article number: 30762 (2025)

Authors: Tao Zhang, Xinglong Tang, Cong Dai, Guiying Ren

- Parameter optimization of key components in seed-metering device for pre-cut seed stems of Pennisetum hydridum

Scientific Reports volume 15, Article number: 31318 (2025)

Authors: Chong Liu, Xiongfei Chen, Qiang Xiong, Muhua Liu, Junan Liu, Jiajia Yu, Peng Fang, Yihan Zhou, Chuanhong Zhan, Yao Xiao

- Optimization of new and thermally aged natural monoesters blends for a sustainable management of power transformers

Industrial Crops and Products, Volume 235, 1 November 2025, 121741

Authors: Gerard Ombick Boyekong, Gabriel Ekemb, Emeric Tchamdjio Nkouetcha, Ghislain Mengata Mengounou, Adolphe Moukengue Imano

July Publication Roundup

Here's the latest Publication Roundup! In these monthly posts, we'll feature recent papers that cited Design-Expert® or Stat-Ease® 360 software. Please submit your paper to us if you haven't seen it featured yet!

Featured Articles

Microwave-assisted extraction of bioactive compounds from Urtica dioica using solvent-based process optimization and characterization

Scientific Reports volume 15, Article number: 25375 (2025)

Authors: Anjali Sahal, Afzal Hussain, Ritesh Mishra, Sakshi Pandey, Ankita Dobhal, Waseem Ahmad, Vinod Kumar, Umesh Chandra Lohani, Sanjay Kumar

Mark's comments: Kudos to this team for deploying a Box-Behnken response-surface-method design--convenient by only requiring 3 levels of each of their 3 factors (power, time and sample-to-solvent ratio)--to optimize their process. Given all the raw data I was able to easily copy it out and import it into my Stat-Ease software and check into the modeling--no major issues uncovered. The authors did well by diagnosing residuals and making use of our numerical optimization tools to find the most desirable factor combination for their multiple-response goals.

Be sure to check out this important study, and the other research listed below!

More new publications from July

- Improving the heterotrophic media of three Chlorella vulgaris mutants toward optimal color, biomass and protein productivity

Scientific Reports volume 15, Article number: 23325 (2025)

Authors: Mafalda Trovão, Miguel Cunha, Gonçalo Espírito Santo, Humberto Pedroso, Ana Reis, Ana Barros, Nádia Correia, Lisa Schüler, Monya Costa, Sara Ferreira, Helena Cardoso, Márcia Ventura, João Varela, Joana Silva, Filomena Freitas, Hugo Pereira

- Calibration and establishment for the discrete element simulation parameters of pepper stem during harvest period

Scientific Reports volume 15, Article number: 21143 (2025)

Authors: Jiaxuan Yang, Jin Lei, Xinyan Qin, Zhi Wang, Jianglong Zhang, Lijian Lu

- Design Expert Software Being Used to Explore the Factors Affecting the “Water Garden”

American Journal of Analytical Chemistry, 16, 107-116

Authors: Zelin Miu, Yichen Lu

- Quality improvement of recycled carbon black from waste tire pyrolysis for replacing carbon black N330

Scientific Reports volume 15, Article number: 23726 (2025)

Authors: Tawan Laithong, Tarinee Nampitch, Peerapon Ourapeepon, Natacha Phetyim

- Development and in vitro characterization of embelin bilosomes for enhanced oral bioavailability

Journal of Research in Pharmacy, Year 2025, Volume: 29 Issue: 4, 1616 - 1626, 05.07.2025

Authors: Shreya Firake, Devanshi Pethani, Jeet Patil, Avinash Bhujbal, Rahul Gondake, Dhanashree Sanap, Sneha Agrawal

- Performance optimization and mechanism research of C20 coal gangue concrete based on response surface and water resistance

AIP Advances, 15, 075316 (2025)

Authors: Yong Cui, Xiwen Yin, Qiuge Yu

- Multi-objective optimization of boiler combustion efficiency and emissions using genetic algorithm and recurrent neural network in 660-MW coal-fired power plant

Eastern-European Journal of Enterprise Technologies, 3(8 (135)), 23–33

Authors: Mohamad Arwan Efendy, Ahmad Syihan Auzani, Sholahudin Sholahudin

- Optimization and characterization of polyhydroxybutyrate produced by Vreelandella piezotolerans using orange peel waste

Scientific Reports volume 15, Article number: 25873 (2025)

Authors: Mahmoud H. Hendy, Amr M. Shehabeldine, Amr H. Hashem, Ahmed F. El-Sayed, Hussein H El-Sheikh

- Multi-functional electrodialysis process to treat hyper-saline reverse osmosis brine: producing high value-added HCl, NaOH and energy consumption calculation

Environmental Sciences Europe volume 37, Article number: 121 (2025)

Authors: Haia M. Elsayd, Gamal K. Hassan, Ahmed A. Affy, M. Hanafy, Tamer S. Ahmed

- Quality by Design-Based Method for Simultaneous Determination of Glimepiride and Lovastatin in Self-Nano Emulsifying Drug Delivery System

Separation Science Plus, 8: e70097

Authors: Priyanka Paul, Raj Kamal, Thakur Gurjeet Singh, Ankit Awasthi, Rohit Bhatia

- Sustainable Adsorbents for Wastewater Treatment: Template-Free Mesoporous Silica from Coal Fly Ash

Chemical Engineering & Technology, 48: e70077

Authors: Thapelo Manyepedza, Emmanuel Gaolefufa, Gaone Koodirile, Dr. Isaac N. Beas, Dr. Joshua Gorimbo, Bakang Modukanele, Dr. Moses T. Kabomo